Database

We present a database containing publicly available models with weights. We focus on one of the most popular machine learning tasks, image classification, as it is also typically used to demonstrate the effectiveness of attacks and defenses on ML models.

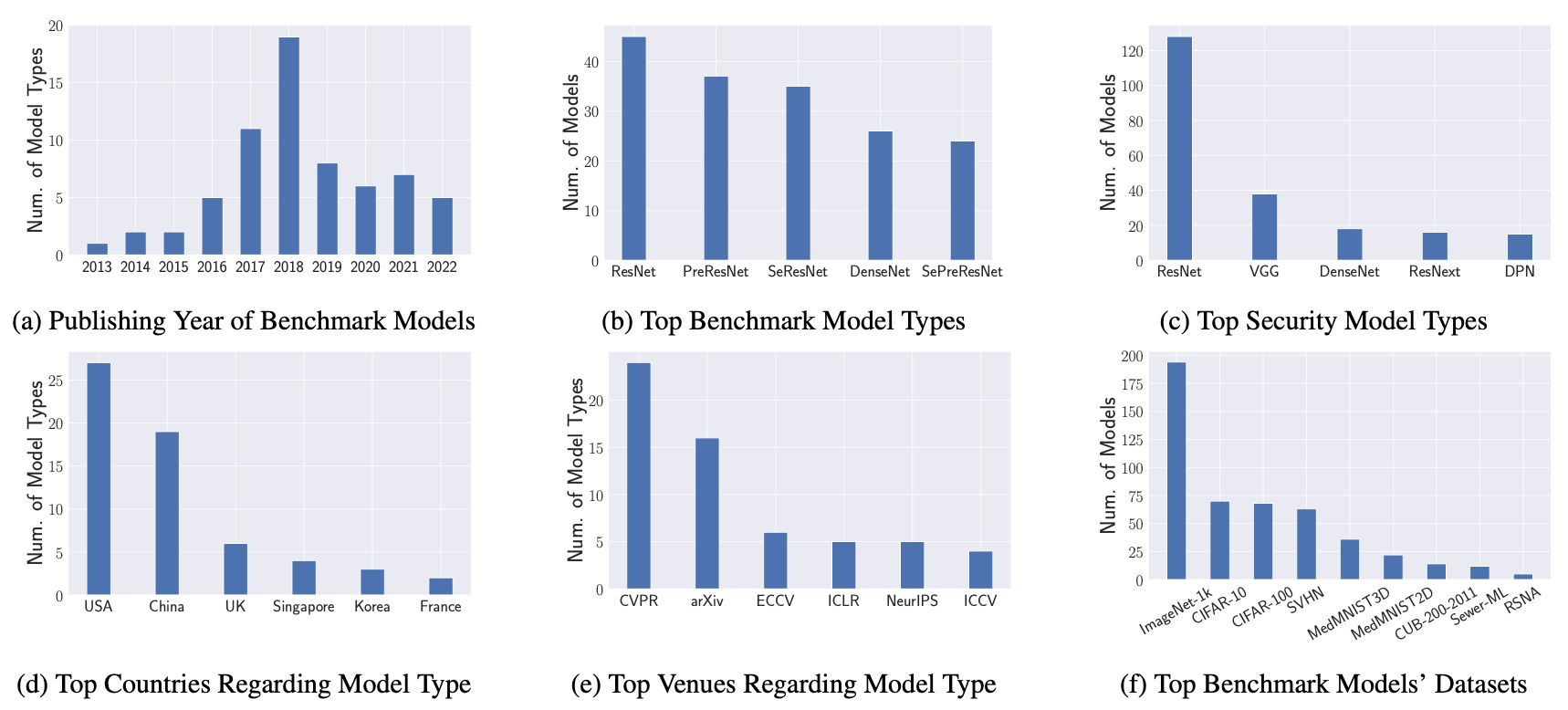

An overview of the database statistics is presented below.

Evaluations

Thanks to SecurityNet, we can perform an extensive evaluation of model stealing, membership inference, and backdoor detection on a large set of public models. To the best of our knowledge, this has not been done before. Our analyses confirm some results from previous works on a much larger scale and uncover new insights. They also show that some previous results obtained on researchers' self-trained models can differ on public models.

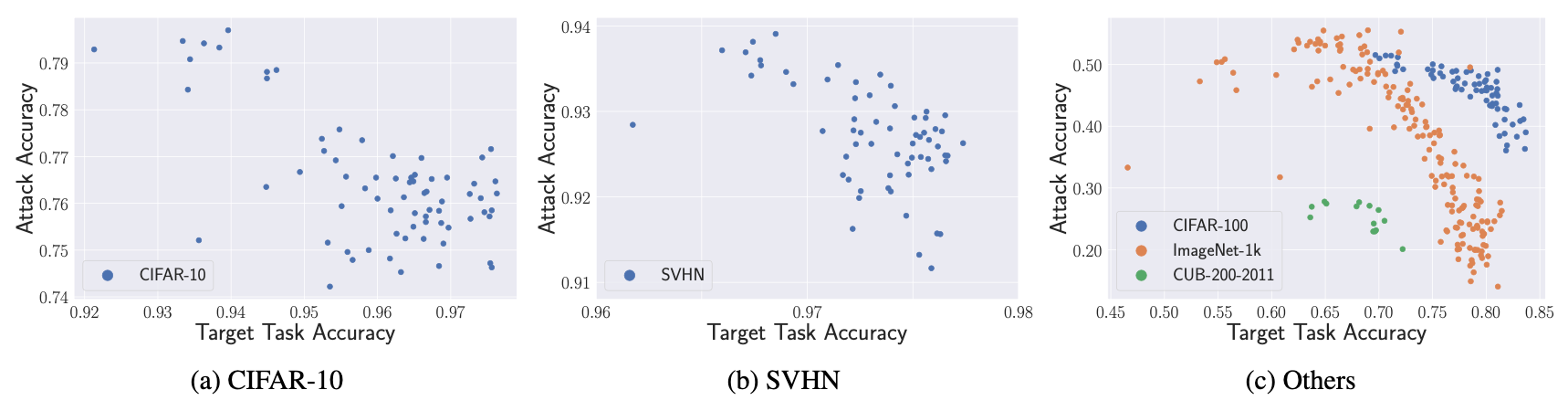

We find that the model stealing attack can perform especially poorly against target models trained on certain datasets, such as CUB-200-2011, compared with target models of the same architecture trained on other datasets. Furthermore, we demonstrate that model stealing performance negatively correlates with the target model's performance on its original task. On some modern high-performing models, it is too low to be effective.
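To make the correlation claim concrete, here is a minimal sketch of how one might quantify it. It rests on two assumptions:

- Stealing performance is measured as agreement, the fraction of query inputs on which the stolen and target models predict the same label.
- The relationship is summarized with a Pearson correlation computed across many target models.

The paper's exact metric may differ. The names `agreement` and `pearson` are hypothetical helpers, not part of SecurityNet.

```python
from typing import Sequence


def agreement(target_preds: Sequence[int], stolen_preds: Sequence[int]) -> float:
    """Fraction of inputs where the stolen model predicts the same label as the target.

    Assumed stealing-performance metric (fidelity); not necessarily SecurityNet's.
    """
    if len(target_preds) != len(stolen_preds) or len(target_preds) == 0:
        raise ValueError("prediction lists must be non-empty and of equal length")
    matches = sum(t == s for t, s in zip(target_preds, stolen_preds))
    return matches / len(target_preds)


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x * var_y) ** 0.5


# Synthetic placeholder values for illustration only, NOT SecurityNet measurements:
# one entry per target model (target-task accuracy, stealing agreement).
target_accuracy = [0.70, 0.80, 0.90]
stealing_agreement = [0.60, 0.50, 0.40]
print(pearson(target_accuracy, stealing_agreement))  # a negative coefficient
```

With real measurements from many public models, a coefficient below zero would correspond to the negative correlation described above.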

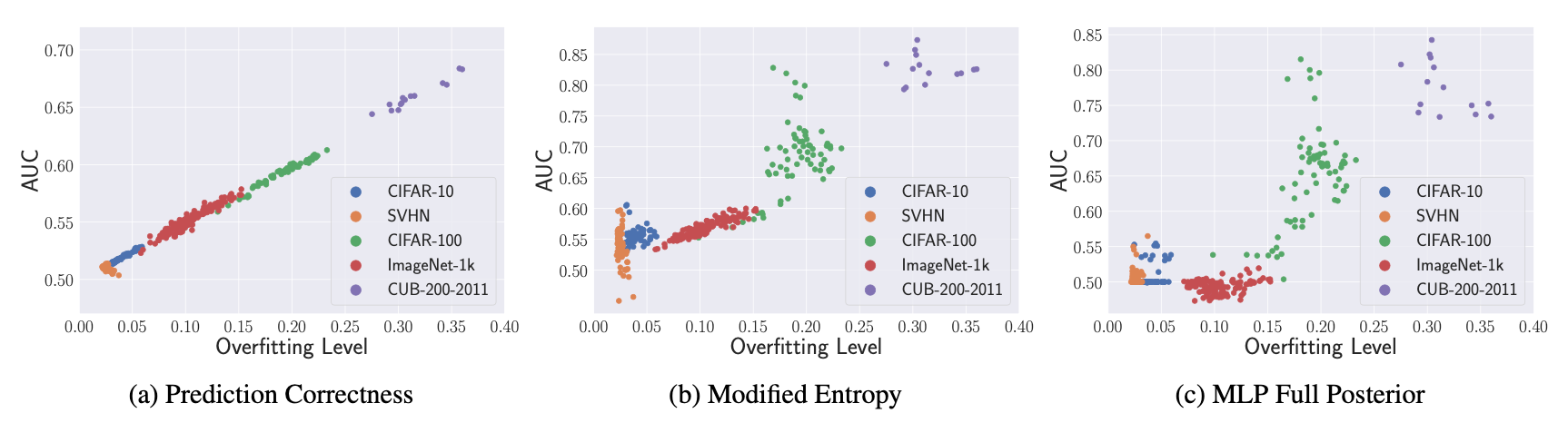

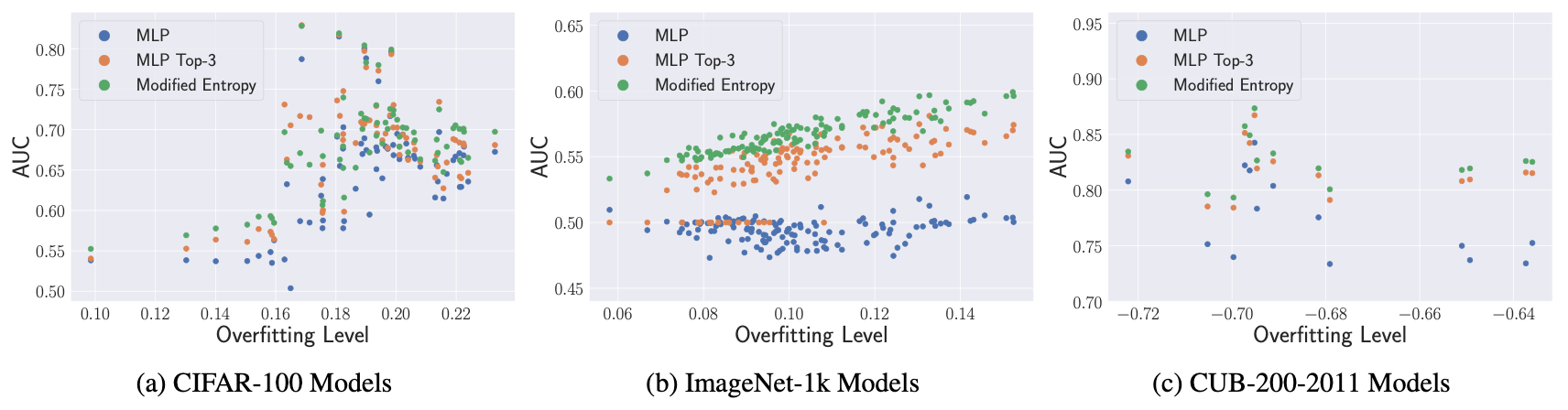

As for membership inference, we observe, consistent with previous works, that attack performance positively correlates with the victim model's overfitting level. Additionally, we find that methods that perform well on experimental datasets are not guaranteed to perform similarly on more difficult datasets. Contrary to previous works' results, the MLP-based attack performs differently depending on the input method when applied to models trained on data with a large number of classes (e.g., ImageNet-1k).
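The role of the input method can be illustrated with a minimal sketch of two attack-input choices common in the membership inference literature. These are assumed here, not taken from the paper:

- Feed the full posterior vector, whose length equals the number of classes.
- Feed only the top-k posteriors, sorted in descending order.

The sketch also assumes the overfitting level is the train-test accuracy gap. All function names are hypothetical.

```python
from typing import List, Sequence


def overfitting_level(train_acc: float, test_acc: float) -> float:
    """Train-test accuracy gap, used here as an assumed proxy for overfitting."""
    return train_acc - test_acc


def full_posterior_features(posterior: Sequence[float]) -> List[float]:
    """Attack input = the whole posterior vector (length = number of classes)."""
    return list(posterior)


def topk_sorted_features(posterior: Sequence[float], k: int = 3) -> List[float]:
    """Attack input = the k largest posteriors in descending order (length = k)."""
    if k < 1 or k > len(posterior):
        raise ValueError("k must be between 1 and the number of classes")
    return sorted(posterior, reverse=True)[:k]


# Hypothetical 1000-class posterior (ImageNet-1k scale): one confident class,
# the remaining probability mass spread uniformly over the other classes.
num_classes = 1000
posterior = [0.5 / (num_classes - 1)] * num_classes
posterior[42] = 0.5  # hypothetical confident prediction on class 42

print(len(full_posterior_features(posterior)))  # 1000
print(len(topk_sorted_features(posterior)))     # 3
```

The feature dimension of the first method grows with the number of classes, while the second stays fixed at k. An MLP attack model therefore sees very different inputs under the two methods on many-class models.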

Please refer to the paper for a more detailed analysis, including results on benchmark vs. security models and the correlation with metadata.